I’ve split out this post from my main summary of The Most Human Human by Brian Christian. Click the link to find my the full summary for that book.

What is information entropy?

Formally, the amount of information is defined by the degree to which it reduces uncertainty. Claude Shannon coined the term entropy to to describe the value or density of information. Higher entropy means we need greater information content to reduce the uncertainty.

Most of us are intuitively familiar with the concept of information entropy (even if we haven’t heard the term). When you’re trying to find the passage in a Word document, you’ll hit Control + F and enter the most unusual word or phrase from that passage. (A common trick is to type “tk” in places you plan to revisit, as no English words contain “tk”.)

Entropy also focuses our attention and guides us on where to look. Studies have shown that when people read books, their eyes linger for longer on the more unusual and unpredictable words, while skipping over the predictable ones.

Biased systems are lower entropy

Entropy is highest when all outcomes are equally probable. For example, the results of flipping a fair coin contain more information than flipping a biased one. To report the results for a fair coin, you’d need to report approximately 50% of all results. But for, say, a heads-biased coin, you only need to report all the tails results, which will be fewer than 50% of all results. We can therefore compress the results of a biased coin more easily, which means it has less entropy.

Shannon letter guessing experiment

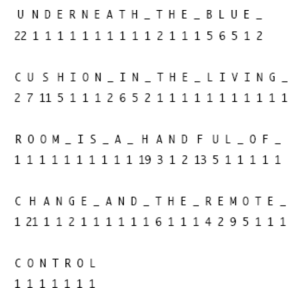

Shannon developed a letter guessing experiment/game to calculate the entropy of the English language. It’s a bit like hangman, but you guess only one character at a time. For example, if you guess “e” correctly, it won’t fill in all the “e”s in the sentence, only the one for that slot. You then note down the number of guesses you needed for each guess below each letter. More guesses means higher entropy.

The below picture is a screenshot taken from a game that Christian played. For the first character, he needed 22 guesses before he got the letter “U”. Once he did, he got each character for the rest of the word “underneath” on the first try.

[I’ve tried to find a version of this online to no avail. If anyone finds one, please let me know.]

Shannon found that the average entropy of a letter was between 0.6 to 1.3 bits, meaning that a reader can usually guess the next letter correctly around half the time.

“When we write English half of what we write is determined by the structure of the language and half is chosen freely.”

Shannon showed that, mathematically, text prediction and text generation are the same. Researchers now suggest that we’d have to consider a computer “intelligent” if it could play the Shannon game optimally. That is, a program that can perfectly predict what you write would be just as intelligent as a program that could write human-like responses to you.

Novels have more information than movies because they “outsource” more to the reader

Christian argues that although movie file is much larger than a file containing a novel, novels arguably contain much more information. This is because novels outsource all the visual data to the reader. A reader has to imagine what the characters and scenes look like, while someone watching a movie has it all given to them (hence the larger file size of movies). But these visual details usually don’t matter. So a novel arguably contains more information than a movie and therefore has greater experienced complexity.

This is why, at the end of a long day, you may feel too tired to read a book but not too tired to turn on the TV or listen to music. Novels make greater demands of their readers.