In this podcast summary, Will MacAskill talks to Rob Wiblin about longtermism and his recent book What We Owe the Future. Will also wrote Doing Good Better, which I have summarised here.

The original podcast and full transcript are here on the official 80,000 Hours website. The original podcast is 2 hours 54 minutes long (1 hour 56 minutes at 1.5x speed). The estimated reading time for this summary (excluding the “My Thoughts” section) is 42 minutes.

As usual with my summaries, I’ve rearranged things. The first part of the summary covers longtermist ideas and the arguments for and against them. I then outline the more specific EA and longtermist causes covered at different points in the podcast. Finally, I put Will’s personal journey at the end. It was interesting, but perhaps less relevant to people who are mainly interested in the longtermist ideas themselves.

Key Takeaways

Longtermism is the view that positively influencing the long-term future is a key moral priority of our time.

- Will’s version is relatively mild, as he’s not saying the long-term future is “the” key moral priority, just that it is “a” key moral priority.

- Will is not saying longtermism is where we should spend all our efforts and money. But we currently spend probably less than 0.1% of total GDP on it, so we have room to do a lot more.

Longtermist ideas may sound weird in theory but longtermism as currently practised by EAs isn’t all that weird. Some arguments against longtermism, and Will’s responses to them, include:

- It’s too hard to affect the long-term future due to uncertainty and cluelessness. Will is very sympathetic to this objection. His two responses are:

    - If you accept that most value is in the long-term future (because most people are in the future), this cluelessness objection really applies to everything.

    - You can mitigate this by pursuing “robust” EA causes that look good from a variety of perspectives. Examples include promoting clean energy, reducing the risk of war, and promoting EA values.

- Prioritising the long-term future is too demanding when there are still so many problems today.

    - This is a general demandingness objection that is not unique to longtermism. In theory, moral philosophy doesn’t have a good answer for what the right “balance” should be between prioritising today/ourselves vs prioritising the future/others. It tends towards all or nothing.

    - In practice, however, we do so little for the long-term future that the real question is whether future generations deserve some of our efforts, not all of them.

- The idea that we should work on things that have tiny chances of providing enormous value is weird.

    - Will accepts this may be unintuitive but notes that people are generally bad at reasoning with small probabilities.

    - Moreover, the risks longtermists work on are not actually that small relative to the resources deployed to address them, especially when considered at a community level.

Some people have suggested it could be better to promote longtermist causes by appealing to people’s self-interest and simple cost-benefit calculations. However, Will thinks:

- the best longtermist causes are probably not the best causes on a short-termist or self-interested basis (though they can still be good causes on that basis); and

- it’s important to convey our goals accurately so that people can give feedback on things we might have gotten wrong (e.g. if there is information to suggest a particular risk is higher/lower than initially thought).

There are broadly two ways of affecting the long-term future:

- Extending the future of civilisation (e.g. reducing extinction risk); or

- Improving the quality of the long-term future (e.g. improving morals)

Which of these two we prioritise depends heavily on whether we think things are good or bad on balance, i.e. whether most people’s lives contain more happiness than suffering:

- These questions are extremely neglected, but are extremely important – not just to longtermism but even to things like public health measures.

- Will thinks the historical balance of suffering vs happiness is unclear, partly because it’s unclear which beings we should take into account.

- But he thinks the future trajectory looks good, mostly because there’s a large asymmetry between altruism and sadism. That is, most people are neutral, some are altruistic, but very, very few are determinedly sadistic.

Will personally took a long time to buy into longtermism, too. Particularly in its early days, longtermism seemed to involve mostly armchair philosophising, and Will wanted to do concrete things. Today there are more concrete longtermist causes such as pandemic prevention, reducing lead exposure, and investing in clean energy.

Detailed Summary

What is Longtermism

Will defines “longtermism” as follows:

Longtermism is the view that positively influencing the long-term future is a key moral priority of our time.

When people say “long-term” in ordinary usage, they usually just mean a few years or decades. Instead, Will is thinking about a potentially vast future, which could last millions or billions of years.

Stronger versions of this view are possible, in which longtermism is more than just “a” key moral priority. But Will focuses on the weaker version because he’s more confident in it, and because the difference doesn’t matter much in practice for now, since we devote so few resources to the long-term future.

Relationship with Effective Altruism

Effective altruism and longtermism are separate ideas. Many people in EA focus on near-term issues and are not longtermists. Similarly, there are people who focus on long-term issues but are not part of the EA community. Some people may focus on longtermist issues because they find them interesting, without claiming that this is one of the best ways of doing good. One example is The Long Now Foundation.

Arguments For Longtermism

According to Will, the core argument for longtermism is:

- Future people matter morally;

- There could be enormous numbers of future people; and

- We could make a difference to the world of future people.

To some degree, longtermism is just common sense. If our actions might harm someone, the fact that the person harmed isn’t born yet shouldn’t make our actions more defensible. The most controversial plank of Will’s argument is probably the third one – that we could make a difference to future people. This is discussed below under Cluelessness makes improving the long-term future very intractable.

Are there better ways to promote longtermist causes?

Rob questions whether the adoption of longtermism was (or is) slowed down by not using more common-sense cost-benefit arguments that appeal to people’s self-interest. [This is raised at both 0:26:44 and 1:02:07 but it’s a similar point so I’ve combined it here.]

Rob first refers to Richard Posner’s book Catastrophe: Risk and Response (2003) which lays out a “boring” common-sense case that humanity is vulnerable to big disruptions, and we’re not doing enough about that. Will accepts that it’s very possible such an argument could have sped up the adoption of longtermism. He also notes that early longtermism discussions had a certain “fringiness” that was neither helpful nor necessary. However, Will points out that, at least in theory, Posner differs from longtermism in two important ways:

- Posner focuses on catastrophes that might kill, say, 10% of the population, rather than on risks affecting the very long-term future; and

- Posner applies a discount rate, such that the future loses virtually all its value after just a few hundred years.

Later in the podcast, Rob notes that some people have recently argued that the risk of extinction within our lifetimes is so high (10% or more) that you don’t even need to care about future generations to be worried about it: people who only care about themselves and those they know should be concerned too. These people worry that the philosophical arguments for longtermism are a distraction and undersell the case for caring about extinction risk.

Will acknowledges there’s an important role for people to point out that catastrophic risks from pandemics or AI are much higher than most people think. But he also believes that:

- Longtermist causes probably aren’t the best ways of doing good if you’re just concerned about the short term; and

- It’s important to convey our goals accurately.

Longtermist causes probably aren’t the best ways of doing good in the short term

The core idea of EA is that we want to focus on the very best things to do, rather than just very good things. Will points out that, on its face, it would seem extremely suspicious and surprising if the best thing we could do for the very, very long term is also the very best thing we can do for the short term.

If we’re just concerned about what happens over the next century, then reducing extinction risks and promoting AI safety may still be very good things, just not the very best things. (But they will be if we take a much longer time horizon.)

For example, Will thinks he has about a 20% chance of dying of cancer, whereas he thinks the risk of misaligned AI takeover is only about 3%. And even if there is a misaligned AI takeover, he thinks there’s only about a 50% chance that will involve killing all humans, rather than just disempowering them.
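To make the comparison concrete, here is a minimal Python sketch that multiplies out Will’s three estimates. Treating the “kills all humans” branch as the only way a takeover kills him, and treating the estimates as covering comparable time spans, are simplifying assumptions for this sketch, not something the podcast specifies.

```python
# Will's rough personal-risk estimates as reported in the podcast.
P_DIE_OF_CANCER = 0.20           # chance of dying of cancer
P_MISALIGNED_TAKEOVER = 0.03     # chance of a misaligned AI takeover
P_ALL_KILLED_IF_TAKEOVER = 0.50  # chance a takeover kills all humans rather than disempowering them

# Assumption (not from the podcast): a takeover only kills Will via the
# "kills all humans" branch, and both estimates cover comparable time spans.
p_killed_by_ai = P_MISALIGNED_TAKEOVER * P_ALL_KILLED_IF_TAKEOVER
cancer_vs_ai = P_DIE_OF_CANCER / p_killed_by_ai

print(f"Chance of being killed by misaligned AI: {p_killed_by_ai:.1%}")
print(f"Cancer is about {cancer_vs_ai:.0f}x as likely to kill him")
```

On these numbers, his chance of being killed by misaligned AI comes out at about 1.5%, roughly a thirteenth of his cancer risk, which illustrates why AI safety need not be the best cause on a purely self-interested basis.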

It’s important to convey our goals accurately

Will thinks that we generally get better outcomes if people understand what our goals are. We could get information that biorisk is higher than we thought, or that AI risk is higher or lower than we thought, or that some new cause area is even more important.

The environment or the available information can change. If the EA community just told people “Adopt this particular policy,” without explaining the ultimate goals, people could end up doing the wrong thing when circumstances change.

Arguments Against Longtermism

The arguments against longtermism discussed in this podcast are:

- Nihilism – it’s possible the world is just entirely meaningless. The podcast doesn’t really expand on this.

- Cluelessness makes improving the long-term future very intractable;

- Tiny chances of enormous value are unintuitive;

- Sacrificing present wellbeing for future generations is too demanding; and

- Longtermism might be used to justify atrocities.

The last three arguments are all variants of a “fanaticism” concern that arises if you think all the value is in the long-term future. However, Will’s response to each of them is different.

Cluelessness makes improving the long-term future very intractable

[This point is actually made in two different parts of the podcast: at 00:42:28 (re: crucial considerations) and 01:47:27 (re: tractability). I think the underlying point is the same – or at least similar – so have combined them here.]

A common objection is that, because longtermists are trying to have impact so many years into the future, there aren’t good feedback loops to work out if their acts are helpful or harmful. This makes longtermist causes much less tractable than, say, improving the health of people around today where feedback loops are much more rapid.

Will and Rob agree that this is a very important objection. Will also points to the fact that many important insights (e.g. probability theory, population ethics, the idea that the future might be enormously large) were made relatively recently, so we could be missing some crucial considerations. For example, in the 19th century John Stuart Mill and other utilitarians argued that we should keep coal in the ground so that future generations will have energy. At the time, they had a really bad understanding of just how much coal there was. In fact, if they had succeeded, it likely would’ve been harmful to future generations as it would have slowed down the Industrial Revolution and set back technological progress.

Will has two responses to this:

- The first is to point out that this cluelessness objection really applies to everything.

- The second is to pursue “robust” EA.

First response to cluelessness: it applies to everything

If you accept that most value is in the future (because most people are in the future), the cluelessness objection actually applies to everything – including things motivated by short-term benefits. You might get feedback loops on whether bed nets work, but saving someone’s life with bed nets also has very long-term consequences, and we don’t get feedback loops on those. [Hilary Greaves has made the same point: cluelessness is not an objection specific to longtermism.] Some people might say it all washes out, but that is itself a claim, and quite a bold one.

It seems reasonable to assume that, on average, well-meaning people who really try to reason about things do a neutral or better job of affecting the long-term future than people who don’t. It seems implausible that people who are trying to improve the long-term future, and relying on evidence to do so, are systematically making it worse. In general, if you know someone is trying to achieve X, that makes X more likely to happen, because you know there’s an agent trying to bring it about. Similarly, while evidence can sometimes lead to wrong beliefs, on average it makes your beliefs more likely to be true.

Second response to cluelessness: robust EA

Instead of focusing on things that seem optimal on one perspective, robust effective altruism looks for things that seem good on a wide variety of perspectives.

Although Will contrasts “robust” EA with longtermist EA, the two overlap. You might want to pursue robustly good actions if you’re not sure whether longtermism is true, or if you think it is true but are not sure what the best ways to promote the long-term future are.

Three examples of “robust” EA include: clean energy; reducing the risk of war; and growing EA. These are discussed below under Specific Longtermist Causes.

Tiny chances of enormous value are unintuitive

If there’s so much more value in the long-term future than in the short term, that could suggest you should do some really extreme things with negative short-term consequences, just to slightly improve the odds of the long-term future going well.

Will has several responses to this concern:

- The unintuitiveness of this is a general problem in decision theory, and there’s no real answer to it. It turns out you can’t really design a decision theory that avoids this result without it having some other very counterintuitive implications.

- In practice, the risks longtermists work on are not that small. People are generally very poor at reasoning about probabilities. Will estimates the probability of extinction from an engineered pandemic this century at about 0.5%, and he thinks the risks from AI are much higher. For comparison:

    - The chance he’ll die in a car crash in his lifetime is also about 0.5% (1 in 200).

    - The probability of dying in a one-mile drive is about 1 in 300 million, yet most people still wear seatbelts on such drives.

    - The chance of a single vote swinging an election is about 1 in a million (in a smallish country) or 1 in 10 million (in bigger countries, if you live in a swing state).

- Actions that require more than one agent should be considered at a community level rather than an individual level. This is a general issue for decision theory, not specific to longtermism. For example, we may agree it makes sense to attend a protest that has a 10% chance of effecting good policy change, even if the probability that your individual attendance makes the difference is so low that it doesn’t seem “worth it”. Otherwise, we get weird results where the climate change community as a whole should hold the protest, but every individual is wrong to participate.
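The community-level point can be illustrated with a toy expected-value calculation in Python. Everything here is hypothetical: the value units, the 10,000 participants, the cost of attending, the chance that any one person is pivotal, and the convention of crediting each participant with an equal share of the collective outcome are assumptions for illustration only, not figures or methods from the podcast.

```python
from dataclasses import dataclass


@dataclass
class Protest:
    """Toy model of a collective action; all fields are hypothetical inputs."""

    p_success: float         # chance the protest as a whole changes policy
    value_if_success: float  # benefit of the policy change, in arbitrary units
    participants: int        # number of people who attend
    cost_per_person: float   # cost of attending to each person, same units

    def community_ev(self) -> float:
        # Expected net value of the protest judged as one collective act.
        return self.p_success * self.value_if_success - self.participants * self.cost_per_person

    def fair_share_ev(self) -> float:
        # One possible accounting convention: credit each participant with an
        # equal slice of the collective expected value.
        return self.community_ev() / self.participants

    def naive_marginal_ev(self, p_pivotal: float) -> float:
        # Individual-level view: the benefit only counts if *your* attendance
        # is what tips the outcome, which happens with probability p_pivotal.
        return p_pivotal * self.value_if_success - self.cost_per_person


# All numbers below are hypothetical.
protest = Protest(p_success=0.10, value_if_success=1_000_000, participants=10_000, cost_per_person=5)

print(f"Community expected value:    {protest.community_ev():,.0f}")
print(f"Fair-share value per person: {protest.fair_share_ev():,.2f}")
print(f"Naive individual value:      {protest.naive_marginal_ev(p_pivotal=1e-6):,.2f}")
```

Because the fair-share figure is just the community figure divided by the number of participants, the two always agree in sign: if the protest is worth doing collectively, each participant’s share is worth doing too, even though the naive “am I pivotal?” calculation comes out negative here.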

Sacrificing present wellbeing for future generations is too demanding

Some people argue that if there’s so much value in the future, longtermism would suggest the right thing to do is to prioritise future generations even if that completely impoverishes the current one.

Demandingness is not specific to longtermism

Will points out this “demandingness” objection is not specific to longtermism. It also arises in the classic arguments about helping the global poor raised by Peter Singer. Even after you’ve given away 90% of your income, you’re still much better off than the poorest people in the world, so arguably you should keep giving more. Moral philosophy doesn’t really say what the “right” middle ground is – it’s hard to find a philosophical principle that doesn’t end up at either all or nothing.

A similar issue arises when considering whether to give more weight to current generations than to future ones or, put another way, whether to discount future generations. There are some plausible arguments for discounting, such as that you have special relationships with people in the present and that they have given you benefits you should repay. But it turns out that if you discount at any positive rate, you essentially give future generations no weight at all. Yet if you don’t discount them at all, they’re of overwhelming importance – you become hostage to potentially vast numbers of future people. So there’s just no satisfactory middle ground in theory.

[I’m not sure that if you discount by any number, you give future generations no weight. I think you will give some weight to proximate future generations like your grandchildren and great-grandchildren, but no weight to generations in the very long-term future. That is probably how most people currently weight future generations, so it doesn’t seem completely crazy.]
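Both Will’s claim and the bracketed caveat above can be seen in a small Python sketch of constant-rate exponential discounting. The 1% annual rate and the assumption of equal wellbeing in every future year are hypothetical choices for illustration, not figures from the podcast. With discount factor d = 1/(1 + r), the share of all discounted weight falling T or more years out is exactly d^T, because the tail of a geometric series is a scaled copy of the whole series.

```python
def discount_weight(years: float, annual_rate: float) -> float:
    """Weight on wellbeing `years` from now under constant exponential discounting."""
    return (1.0 + annual_rate) ** (-years)


def tail_share(horizon: int, annual_rate: float) -> float:
    """Share of all discounted future weight falling `horizon` or more years out.

    Assumes (hypothetically) the same amount of wellbeing in every future year.
    With d = 1 / (1 + r), sum_{t >= T} d**t equals d**T times the full sum,
    so the share is simply d**T.
    """
    d = 1.0 / (1.0 + annual_rate)
    return d ** horizon


RATE = 0.01  # hypothetical 1% annual discount rate, for illustration only

for years in (25, 75, 300, 1_000, 10_000):
    print(f"{years:>6} years out: weight {discount_weight(years, RATE):.2e}")

print(f"Share of all weight from year 1,000 onward: {tail_share(1_000, RATE):.2e}")
```

At 1%, people 75 years from now still get nearly half the weight of people alive today, but everything from 1,000 years onward gets a combined share of under 0.01%. So under these assumptions, a constant discount rate gives proximate generations real weight and the very long-term future effectively none.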

A “buckets” approach doesn’t really help

Rob suggests a “buckets” approach whereby you separate out different classes of moral concern. For example, one class may be impartial concern for others (including future generations); another may be for people around today; and a third may be for people we know. Since the different classes reflect different motivations, arguably each should get some significant fraction of our resources and can’t just be traded off against the impartial class.

However, Will points out that this approach ends up being completely insensitive to the stakes involved. For example, say you decided to spend 33% of your resources on impartially making things go better. Then some new emergency arises where the future will become a dystopian hellscape unless you spend an extra 1%. Obviously that matters, but Rob’s “buckets” approach doesn’t really let you respond to it. So you end up having to choose between potentially fanatical demandingness and potentially complete indifference to the harm that will be done if you don’t act, with no middle ground.

In practice we’re not there yet

In practice, though, the idea of society dedicating all its resources to improving the long-term future is ridiculously far off. The live question is simply whether we give future generations some concern (as opposed to none at all). Will estimates that we spend less than 0.1% of global resources on making the long-term future go better. We can reassess after bumping that up to, say, 1%.

He does expect that at some level the best things we could do may just be to build a flourishing society generally, rather than anything targeted to the long-term future – but we’re currently far from that level.

Longtermism might be used to justify atrocities

Throughout history, there have been cases where people with a utopian vision of the future have used that vision to try and justify atrocities. The attempts to get to that utopian future have always backfired and ended up making things worse.

Will distinguishes this kind of utopianism, which he thinks is dangerous, from general concern for the long-term future. Most longtermists don’t actually have a vision of a utopian future, beyond a vague sense that it might be really good. But even if you have a utopian vision, that does not mean the ends justify the means.

There are two major camps in moral philosophy: consequentialism and non-consequentialism. A key difference between the two is that consequentialism is not subject to “side constraints” – the only relevant consideration is the outcome you produce. Non-consequentialism, on the other hand, says that the ends don’t always justify the means. For example, even if one person’s life could be sacrificed to save 10 people, non-consequentialism may say you shouldn’t sacrifice them because the right to life acts as a side constraint.

In cases of moral uncertainty, you should give weight to both consequentialism and non-consequentialism. If it’s a case of sacrificing one person to save two others, consequentialism says you should do it but non-consequentialism would say you shouldn’t. How you actually aggregate or trade off between the two is tricky. But you should still give some weight to non-consequentialism, which will often mean not acting in the way that would do the most good.

Overall Rob thinks it’s a good thing that the horrific history of utopianism has put people off the idea that you can use consequentialism to justify atrocities. But Rob and Will also think it would be nice to have some more positive strains of utopian thinking to offset some of the more common dystopian visions. In fact, Will has a side project called Future Voices, which features short stories of future people, and involves authors such as Ian McEwan, Jeanette Winterson and Naomi Alderman.

Different EA Cause Areas

At a high level, there are two categories of longtermist cause areas:

- Extending the future of civilisation (e.g. reducing extinction risk and thereby increasing the number of lives that could be lived); and/or

- Improving the quality of the long-term future (e.g. by improving the values and ideas that guide future society, preserving information or preserving species).

Some causes, such as those falling under “robust” EA (see below), may fall under both of these, and may also be good from short-termist perspectives.

Extending the future of civilisation

The podcast doesn’t really talk all that much about extinction risk causes. Two of the cause areas I’ve included here – artificial intelligence and nuclear war – may fall better under “Improving the Quality/Values of the Long-Term Future” (according to Will, anyway).

Pandemic prevention, focusing on the worst pandemics

80,000 Hours has been recommending this as a career area since 2014 (it’s not just because of Covid). It is such a concern because developments in synthetic biology have made it possible to develop pathogens that could make Covid-19 look like child’s play, and literally kill everyone on the planet.

In this area, the EA community is working on:

- Far-UVC technology. This is basically sterilising a room by shining far-ultraviolet light, a short-wavelength form of UV. If this were implemented as a standard for lightbulbs, humans could potentially be protected from all pandemics and respiratory diseases. It’s still early stage, though.

- Early detection. Currently we don’t screen much for novel pathogens. But in theory we could be constantly screening wastewater for things that look like new viruses or bacterial infections.

Artificial intelligence

When we get general AI (i.e. AI that is good at a wide array of tasks), it could start improving itself. This could make technological progress advance far faster than it currently does. Humans are currently bottlenecked by the size of our brains, whereas AI would be bottlenecked only by the availability of computing power.

So once AI reaches roughly human-level intelligence, that could be a turning point where it starts advancing much, much faster than we can. If the values AI systems care about are not aligned with ours – e.g. if they don’t care about the things we do – humanity could be completely disempowered. [Will points out that even if a misaligned AI kills all humans, civilisation would still exist – just the AI’s civilisation rather than ours – and that would still have some moral value. I think he has a fair point, but I’ve put this under the Extending the Future of Civilisation heading as there’s quite a lot under the Improving the Quality of the Long-Term Future heading already, and people often talk about misaligned AI as an extinction risk.]

There is therefore a risk that, if general AI is developed soon, it could lock in whatever arbitrary values we happen to hold at that time, even if those values are wrong. That suggests there’s value in trying to fix the worst ideas people hold now, so we don’t accidentally lock them in. Will refers to Nick Bostrom’s idea of differential technological development: some technologies should be developed before others. For example, you want AI systems that can model things really well without being “agents” (e.g. Google Maps) to arrive before agentic AI systems that actually do things (e.g. play StarCraft).

Nuclear war

Risks from nuclear war may also be linked to risks of misaligned AI.

For example, general AI seems much more likely to go well if developed in a liberal democracy than in a dictatorship or authoritarian state. If a nuclear war wipes out most of the liberal democracies in the world, and humanity eventually gets back to the level of being able to develop general AI, what’s the chance that liberal democracy will be the predominant way of organising society at that point? Will guesses around 50-50. He thinks this is a huge, underrated downside of nuclear war.

So it’s plausible that, when you look to the very long-term future, this is a bigger risk from nuclear war than extinction itself.

Improving the quality of the long-term future

Rob raises a few arguments against working to improve the quality of the long-term future. These are:

- Perhaps we would converge to the “right” values anyway, such that we don’t need to actively work on this;

- Our moral views today could be wrong; and

- Promoting moral values could be perceived to be hostile (compared to reducing extinction risk, anyway).

They also talk about the risk of stagnation, which is one issue relevant to the quality of the long-term future.

Would we converge to the “right” values anyway?

Rob suggests that if humanity survives for a really long time, perhaps the “right” values and arguments will ultimately win out anyway, such that we don’t need to work on trying to improve the quality of the long-term future.

Will says it’s something we should be open to, but he’s not optimistic about it. He thinks it’s very hard for society to figure out what the “right” thing to do is, and it might require a very long period of peace and stability to get there. Moreover, once people get into positions of power, they tend to just try to implement their existing moral views or ideology. They don’t tend to hire advisors and moral philosophers to examine whether their existing view is correct.

Some examples suggest our values today are contingent on past decisions, which points against the convergence hypothesis. Moreover, some things that look like convergence may actually be the result of a single culture becoming very powerful and then exporting its values across the world. Examples discussed include:

- Vegetarianism. Attitudes to diet and animals vary a lot from country to country. If the Industrial Revolution had happened in India instead of Britain, we may view factory farming very differently today.

- Monotheism. There doesn’t seem to be any obvious reason why monotheism is so much more popular than polytheism. Rob points out people sometimes argue monotheism could be better for cooperation or more persuasive somehow. But he thinks the effect can’t be that strong, since there are many places – Japan, China, the Indian subcontinent, and the Americas – which didn’t adopt monotheism until very recently. [Sapiens suggests that monotheism tends to be a lot more fanatical and missionary, since monotheists believe only they have the message of the “true” God, whereas polytheism tends to be a lot more tolerant of other gods. This seems plausible to me, and does tend to support a sort of convergence – though it’s perhaps more a case of a single culture becoming dominant and exporting its values, rather than different cultures converging on the same “right” answers. The growth of atheism is also a counterpoint to this. I wonder if we might converge on atheism over a longer timeframe?]

- Monogamy. Monogamous societies were not the norm across history, but have become dominant in recent times. This is largely because Western cultures promoted monogamy through colonialism, and partly because of imitation.

- Abolition of Slavery. Will thinks the argument for this being contingent – i.e. it could have gone either way – is much stronger than one might think. The idea that abolition of slavery was economically inevitable is not favoured among historians today. Abolitionist views were not prevalent outside of Britain, France and the US. For example, the Netherlands was an early modern economy but had almost no abolitionist movements. If the Industrial Revolution had happened there (which it easily could have), slavery may still be widespread today. Will talks about this in more detail in What We Owe the Future, and in his last 80,000 Hours podcast.

What if our moral views today are wrong?

Rob points out that the above discussion makes everything seem very arbitrary, including our moral views today. We’d think it was bad if past people locked us into their moral views, and if our moral views today are arbitrary, it would also be bad to lock future generations into those same views.

Will agrees and refers to the following thought experiment. Imagine ancient Rome with our current level of technology: 10% of people own slaves, elites watch people die in the Colosseum for fun, women have no rights, and cosmopolitanism (the idea that all humans are part of a single community) and moral impartiality are not ideals. What if that society then developed artificial general intelligence and reached a point where digital beings gained sentience? Given the widespread acceptance of slavery, it seems plausible that the vast majority of beings in that world would be slaves. That would be pretty scary.

The question then is, how much better are we than the ancient Romans? While Will thinks we’re better than them, we still seem very far away from the best moral views. And if we – even well-meaning people – lock future generations into our unreflective views, it could be very bad.

Reasons for thinking the future might be better

Will suggests the following as the strongest arguments against his view:

- The moral truth may be a strong attractor;

- Empirical facts may be clearer in the future; and

- Technological abundance may allow for more gains from trade.

[I don’t see these as being counterpoints to the view that our current moral views may be arbitrary and wrong. These just suggest that the future might be better, but they don’t really address the concerns above that our current moral views may still be bad, such that locking future generations into those views would also be bad.]

The moral truth may be a strong attractor

If there’s no such thing as a moral truth, then it doesn’t matter anyway. But if there is a moral truth, we might expect future beings to be closer to it, just like they are likely to be closer to truth in chemistry and physics.

Empirical facts may be clearer in the future

If the future is more technologically advanced, people will have better knowledge of empirical facts. This will mitigate injustices to the extent that those injustices are based on bad empirical facts (e.g. racism based on a belief that some races are intellectually inferior).

However, Will doesn’t think most moral disagreement is empirically based at all – people usually come up with empirical reasons to justify their moral views, rather than the other way around.

Technological abundance may allow for more gains from trade

With more technological progress, we can get much greater gains from trade. Even if most people are selfish and largely indifferent to others’ wellbeing, at least some people in the world will be altruistic. Those altruists may then be able to persuade others to do things that make people happier, if future technology makes that very cheap and easy. Moreover, there is unlikely to be an offsetting number of sadistically motivated people, so if you combine indifference with some altruism, you’ll get an okay situation.

[This argument about the lack of sadistically motivated people strikes me as simplistic – I explain further in my thoughts below. However, I do find convincing the suggestion that wealth and abundance will make it easier to be nice to others in the future. The development of meat substitutes has made it easier to be a vegetarian and I believe that will only increase as those technologies improve.]

Is promoting moral values hostile?

Rob thinks that to date, there seems to have been much more focus on extinction risk than on improving moral values. (Will counters that he would put AI safety in the “improving moral values” bucket rather than the “extinction risk” bucket because even if a misaligned AI takes over and kills everyone, the AI’s civilisation still continues – and that still has moral value.)

One reason Rob suggests for this is that trying to convince other people to have “better” values (which in practice means the speaker’s values) feels more hostile than trying to prevent extinction. Will’s response is that it depends on the methods used to promote values. Brainwashing or tricking people into your moral views is definitely hostile, but other methods are not. For example, just making arguments and writing books to try to change public opinion – like the abolitionists did – doesn’t seem hostile.

Stagnation

This is the idea that, even if there’s no global catastrophe or anything, we could see technological progress and economic growth stagnate for an extended period of time. Will thinks there’s about a one in three chance of a tendency towards stagnation over the course of the century.

Why might stagnation happen?

Will refers to a paper suggesting that good ideas are getting exponentially harder to find. Einstein developed his theory of relativity in his spare time as a patent clerk. Now, breakthroughs in physics require multibillion-dollar equipment (like the Large Hadron Collider) and large teams of people. And discovering the Higgs boson is pretty minor compared to Einstein’s theories of relativity.

At the same time, we’ve been devoting more and more people (both in absolute terms and as a percentage of the population) to research and knowledge work. So far, that increased investment in research and innovation has given us fairly steady economic growth and has helped keep Moore’s law going. However, the global population is expected to decline eventually as fertility rates fall below replacement levels. (Will says projections are that the global population will plateau at around 11 billion.) In addition, the proportion of the population we devote to research and innovation has a cap – it can’t ever exceed 100%, and the actual cap is probably a lot lower than that.

Rob also points out that for most of human history – the hunter-gatherer era and most of the farming era – growth rates were very low. Then the Industrial Revolution created enormous growth. But many have since argued that from about 1970 on, the rate of productivity growth has declined relative to what it was between 1800 and 1970. Economists’ measures of total factor productivity have shown consistent downward trends for the last 50 years or so. Will says he’s sympathetic to these suggestions that we’re already in a stagnation situation, but he points out that this isn’t essential for his argument about stagnation risk to work.

[Note that this discussion is talking about declining growth in frontier countries – i.e. the most advanced economies. While some countries like China have shown much higher growth rates, that is “catch-up” growth. Economists say that catch-up growth can be driven just by increased capital investment (so can be a lot higher) instead of by new inventions.]

Why do longtermists care about stagnation over the next few decades or centuries?

Will suggests two reasons:

- Increased extinction risk. If we get stuck in a period of heightened extinction risk, that increases total extinction risk. So if our extinction risk from engineered pathogens is, say, 0.1% per year, and we never manage to reduce it, then over hundreds or thousands of years that adds up to quite a big total risk.

- Liberal democracy may be at risk. If you think that values like liberal democracy today are pretty good, and you think that those values are fragile, then chances are that society’s values will worsen over a long period of stagnation. Will says he is agnostic on this question – he just wants to point it out as an important consideration, but he’s not confident on the sign.
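To see why a small but persistent risk adds up, here is a minimal sketch in Python. It assumes, purely for illustration, that the risk is 0.1% per year, identical every year, and independent from year to year; those simplifying assumptions are mine, and the function name is hypothetical.

```python
def cumulative_risk(annual_risk: float, years: int) -> float:
    """Probability of at least one catastrophe over `years`,
    assuming the same, independent risk in every year."""
    # Chance of getting through every single year without a catastrophe.
    survival = (1.0 - annual_risk) ** years
    return 1.0 - survival


# Illustrative 0.1% annual risk over increasingly long horizons.
for years in (100, 500, 1000):
    print(years, round(cumulative_risk(0.001, years), 3))
```

Under these assumptions, the cumulative risk is roughly 9.5% over a century and about 63% over a thousand years, which is the sense in which never reducing the risk “adds up”.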

Rob asks if Will’s argument about liberal democracy is the same as the one made by Benjamin Friedman in The Moral Consequences of Economic Growth. However, Will says that Friedman’s argument is basically that, when the economy is growing, people are happy and co-operative in order to grow the pie together. But if the economy is stagnant, people start behaving in worse ways simply to increase their share of the pie.

Friedman’s argument differs from Will’s in that Friedman is talking about how stagnation could lead to a collapse of values over years or decades. Will’s point is not that stagnation itself will lead to a values collapse, but merely that the passage of a long period of time could make things worse. [I found this bit hard to follow because it seems like Will’s point about the fragility of liberal democracy holds regardless of whether there is stagnation. Maybe the claim is that if there continues to be moral progress, that reduces the chance of “good” values like liberal democracy disappearing? But the previous discussion about stagnation focused on technological progress – like in physics and computing – rather than moral progress. So I don’t quite follow the reasoning here – I found Friedman’s explanation more compelling.]

What could stop stagnation?

Will and Rob discuss three ideas:

- Speeding up AI (but that has other risks). This is a very difficult question, with lots of factors on both sides. Stagnation is one factor suggesting AI be sped up (or at least not slowed down). The idea is that AI could do its own R&D, which would be the main way out of stagnation.

- Increasing opportunities for people in poverty. Many people around the world cannot meaningfully contribute to the frontier of research because they’re born into poverty. Increasing opportunities for those people could help reduce stagnation risks. This could take the form of talent scouting programmes – like in sports, but for professional researchers instead.

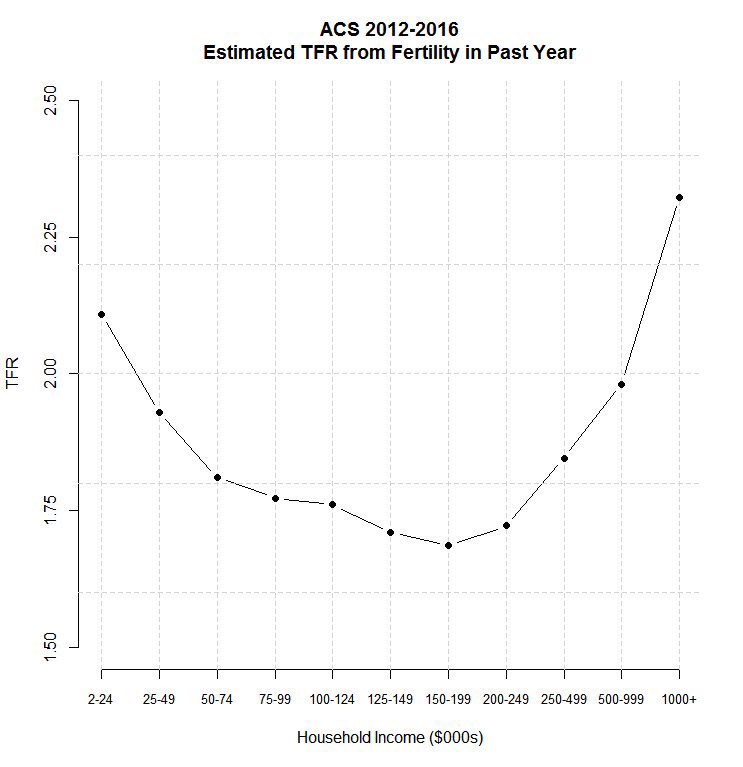

- Increasing population size. Rob refers to the graph above, which plots household income against fertility. Fertility goes down initially (presumably as people become more career-focused), then goes back up when people get very wealthy (presumably because they then have the financial slack and leisure time to have the number of children they actually want). However, Will points out it’s very hard to encourage people to have more children. Hungary apparently spent 5% of its GDP trying to do this, and only moved the fertility rate from 1.5 to 1.7.

Will understands that the standard models suggest we get an extra century of technological progress before things plateau, unless we get to automated R&D by then. He thinks there’s a pretty good chance we’ll get to automated R&D by then, but it’s certainly not guaranteed. Longtermists may therefore want to look at things we could do to increase the chance of getting there.

Should we reduce extinction risk or improve the quality of the long-term future? It depends on whether the future is expected to be good or bad

The question of whether the future is good in expectation is relevant to whether you prioritise extinction risk or improving values. If you thought that on our current trajectory, we were more likely to do more harm than good and keep causing suffering, you might be relatively indifferent to extinction.

To answer the question of whether the future is expected to be good, we should consider:

- our track record to date; and

- the expected future trajectory.

Track record to date: unclear

Will thinks that, to date, it’s unclear whether things overall have been good or bad, though they have probably been good. Reasons why this question is difficult to answer include:

- Which beings do we take into account? Almost all beings are roundworms (nematodes), but it’s unclear what a nematode’s life is like. If we just look at vertebrates, then 80% of all vertebrate life-years ever lived have been lived by fish, and about 20% by amphibians and reptiles. (Humans account for less than one-thousandth of one percent.) Fish can suffer terribly, but their deaths are very short, and their lives are probably close to neutral overall. So if we look beyond humans, the total value of life to date has probably been close to zero. [I think the vast majority of people outside the EA community would focus only on humans, and even within the EA community, I think most people would give greater weight to humans. I would be interested to know what Will’s answer would have been if we only took humans into account.]

- How “good” does a life have to be for it to be “good”? Many views of population ethics say that in order for adding a being to the world to be good, its life can’t just be above zero – it has to be sufficiently above zero. The reason for this is to avoid Derek Parfit’s Repugnant Conclusion. Depending on where that threshold is, you may well conclude that life to date has been strongly negative, because relatively few beings are going to be above this threshold. [I didn’t think this Critical Level View was a particularly common one in population ethics, since where to set the threshold seems clearly arbitrary. This is the first time I’ve heard that view taken seriously.]

- Should we take into account objective goods? We may also want to consider “objective goods” that are not just about wellbeing. Objective goods may include things like knowledge or complexity, which may have value in their own right. Will finds such a view intuitive, but he’s also sceptical of it. One reason is that he doesn’t know how you would possibly weigh objective goods against other things.

Future trajectory: positive

However, if you look at the trajectory and the mechanisms underlying that, Will thinks the future looks more positive. He draws a distinction between moral “agents” and moral “patients”:

- Moral agents are beings that can reason and make decisions and plans – e.g. humans. The trajectory to date for agents has been getting better and better, especially since the Industrial Revolution.

- Moral patients are beings that are conscious but cannot control their circumstances – e.g. other animals. The trajectory to date for patients is less clear. There are far fewer wild animals than previously, but people have different views on whether wild animals’ lives are good or bad. There are many more animals in factory farms and their lives are worse. [Seems like the trajectory to date has been worse overall, then.]

Until there were humans, basically every conscious being was a moral patient. The fraction of all conscious experiences being had by agents is therefore going up gradually from a starting point of zero. Will thinks that if you extrapolate into the future, there will be more moral agents than moral patients, and moral agents’ lives tend to be good. This makes sense, because moral agents want their lives to be good and they’re able to change them.

This goes back to the asymmetry point above in that some agents will systematically pursue things that are good, but very, very few agents will systematically pursue things that are bad. As a result, Will believes there’s a strong asymmetry where the very best possible futures are somewhat plausible, but the very worst possible futures are not. He accepts that it’s entirely plausible that we squander our potential and bring about a society that’s not the very best, but he finds it much, much less plausible that we bring about the truly worst society.

[I don’t find this argument very convincing for reasons explained below under My Thoughts. I also think that it’s largely irrelevant whether a “truly worst” society is plausible. It makes more sense to focus on the respective likelihoods of all “worse than existence” and all “better than existence” societies, rather than just the likelihoods of the best and worst case scenarios.]

Questions about whether things have been good or bad overall are incredibly neglected

Questions such as whether things have been good or bad up until now, or whether most people’s lives are worth living, seem fundamental to understanding existence.

Even outside of longtermism, these questions are incredibly important. If people’s lives are below, or close to, zero, we should focus on improving wellbeing rather than increasing life expectancy. The standard metric used in cost-effectiveness analysis, the quality-adjusted life year (QALY), assumes that death is the worst state you can be in – i.e. zero. But if some people think their lives are worse than zero, we should really focus on improving their quality of life rather than on extending their lives.
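The point about quality-adjusted life years (QALYs) can be made concrete with a toy calculation. This is a hypothetical sketch: the quality weights below (including the below-zero one) are invented for illustration, and `qalys` is a helper written for this example, not part of any standard tool.

```python
def qalys(years: float, quality_weight: float) -> float:
    """Quality-adjusted life years: duration times a quality weight,
    where 1.0 is full health and 0.0 is the value assigned to death."""
    return years * quality_weight


# Hypothetical person whose life is rated slightly below zero.
current_weight = -0.2

# Option A: extend their life by 5 years at the current quality.
extend = qalys(5, current_weight)

# Option B: raise their quality to 0.5 over the 10 years they have left.
improve = qalys(10, 0.5) - qalys(10, current_weight)

print(f"extend: {extend:+.1f} QALYs, improve: {improve:+.1f} QALYs")
```

With a below-zero weight, extending the life subtracts QALYs while improving its quality adds them, which is why the sign of people’s lives matters so much for prioritisation.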

Rob and Will therefore find it completely crazy that no academics or philosophers really focus on this question, and that it’s not a real discipline. There are no published studies on the question of how many people’s lives are above zero, but there are two unpublished ones:

- There’s one unpublished study by Joshua Greene and Matt Killingsworth which looks at it, focusing on people in the US. [They don’t mention what that study found.]

- Another commissioned study [commissioned by EA, it seems] was done by Abigail Hoskin, Lucius Caviola, and Joshua Lewis. That one directly asked people in the US and India questions like “Do your lives contain more happiness or suffering?”

- In the US, about 40% said it was more happiness than suffering while 16% said their life contained more suffering than happiness.

- In India, only around 9% said they had more suffering than happiness.

Robust EA causes

As explained above, these are causes that may not be optimal from a longtermist perspective, but are robustly good across a range of different perspectives. If you’re not sure whether longtermism is true, or if you think longtermism is true but are not sure what the best ways to promote the long-term future are, then robust EA could be an answer.

Some robust causes discussed in the podcast are: clean energy; reducing the risk of war; growing EA; and reducing lead exposure.

Clean energy

Will describes clean energy as a “win-win-win-win-win-win”:

- Fossil fuel particulates kill about 3.5 million people per year. The EU loses about a year of life expectancy just from that. So speeding up the transition away from fossil fuels would be worth it just for health gains.

- Clean energy would mitigate risks and harms of climate change.

- Investment in clean technology and innovation speeds up technological progress. This may help avoid the chance of long-term stagnation.

- Clean energy would reduce energy poverty in poor countries.

- By keeping coal in the ground, clean energy could help humanity bounce back more quickly in the event of some global catastrophe. (This is a more esoteric reason.)

- Clean energy could reduce reliance on authoritarian or dictatorial countries. There’s an idea of a “resource curse” in economics whereby countries with a lot of fossil fuels may be more vulnerable to dictators. This is because a dictator could take power and fuel themselves (literally) by selling those resources, without being responsive to the public at all. In contrast, if a country relies economically on innovation produced by its people, it’s much harder to be authoritarian. Instead, there would be an incentive to educate your people and make sure the population more broadly is flourishing.

The only reason Will could think of for why clean energy might be bad is if you thought economic development in general is bad. For example, you could argue that technology is progressing at a much faster pace than our societal wisdom. Perhaps, then, we want technological growth to progress more slowly in general, to give moral reasoning and political development time to catch up. But Will doesn’t think that’s true.

Reducing the risk of war

Will thinks reducing the risk of war is robustly good because war seems to exacerbate many other risks, such as:

- risks of worst-case pandemics from artificial pathogens;

- developing AI too fast to try and beat the other side;

- risks of a global dictatorship; and

- risks from unknown unknowns.

A plausible explanation is that people may be more likely to do reckless things during a war. For example, during the Manhattan Project in WWII, they tested out a nuclear bomb even though some physicists thought there was a chance that it would ignite the atmosphere and kill everyone. If they hadn’t been at war, they may not have taken that risk.

Growing EA

Will argues it is robustly good to build a community of people who are morally motivated, care a lot about the truth, engage in careful reasoning to try to find it, and are willing to respond to new evidence. The last of these is particularly important, as future EAs could come up with new insights that are much better than the current community’s. So as long as EA can preserve those virtues, growing it looks robustly good.

Reducing lead exposure

[This was actually discussed in the part of the podcast talking about Will’s donations, rather than the part talking about robust EA. Will also describes it as a “very broad” longtermist action, rather than a robust action, but I think it fits here.]

Basically, lead exposure is really bad. It lowers people’s IQ and general cognitive functioning, and there’s some evidence that it may increase violence and social dysfunction. Will thinks that it broadly seems like it would be better if people don’t have mild brain damage from lead exposure, lowering their IQ and making them more impulsive or violent.

Will has recently donated to the Lead Exposure Elimination Project (LEEP), a new organisation incubated within the EA community. LEEP tries to eliminate lead exposure from things like lead paint. For example, they’ve gotten [or are getting? Unclear] the Malawi government to enforce regulation against lead paint. Will thinks that LEEP is in real need of further funding, in a way that some of the more core, narrowly targeted longtermist work that grant-makers have focused on is not.

Will’s Personal Journey with Longtermism

Will personally took a long time to get into longtermism. He first heard about longtermism in 2009, from Toby Ord (his co-founder of Giving What We Can). Back then, far fewer people had bought into longtermism and the activities being discussed were a lot more speculative. Some people foresaw the major risks (e.g. AI and bio-risk), but did not really know what to do about them. Will goes so far as to say that, when he first heard about longtermism, he thought it was “totally crackpot”. About 3-6 months later, he started thinking it wasn’t completely crackpot.

In 2014, Will wrote Doing Good Better (see my summary here). If he’d had free rein, he would have put global health and development, animal welfare, and existential risk on an even playing field. However, especially as it was his first book, the publishers didn’t want to make it too “weird”. (They also pushed back on including more on animal welfare.) It was only after Doing Good Better was done that Will felt he could focus more deliberately on longtermism.

He remembers 2017 in particular, because AlphaGo (a computer program that could play the board game Go) had appeared in 2016. There was much discussion then of when we might reach human-level AI. Some people were arguing for a 50% chance that AI could reach human-level intelligence within 5 years. Will was not particularly convinced by those ultra-short AI timelines, but they led him to look into other things. That then made him want to dive in and figure it all out for himself.

Why Will was not initially convinced

Will found it intuitive to care about future generations. He also bought into the idea that the future is vast, such that most of the moral value was in the future, fairly early on.

The reason he was not intellectually convinced about longtermism was that he saw extinction risk as being the same as existential risk. This had several implications:

- The case for reducing extinction risk depends on some tricky assumptions about population ethics, and he wasn’t convinced about those.

- Reducing extinction risk is only good if the future is net positive. Although Will has always thought that is true, he was not certain of it.

- We may not really know how to reduce extinction risk. It may be that the best way to improve the long-term future is to do things that are also good in the short term (e.g. increase economic growth, improve education, make democracy work better), rather than focusing on extinction risk.

Will still thinks his early intuition was largely correct in that, back then, the tractability of longtermist causes was low. Moreover, he thinks that there was a lot of value in just building up a movement of people who care about doing good in the world and who try to approach that in a careful, thoughtful way. There are many positive indirect benefits from just doing something impressive and concrete (in addition to the benefits from saving thousands of lives) – even if there are theoretical arguments that other causes should be given more priority.

Looking back, Will has been surprised at how influential the ideas of early longtermist thinkers, such as Nick Bostrom, Eliezer Yudkowsky and Carl Shulman, have been. He was also surprised by the impact of the book Superintelligence – he expected it would sell maybe 1,000 to 2,000 copies, but it actually sold 200,000.

What changed his mind

The things that changed Will’s mind were:

- The philosophical arguments became more robust over time; and

- Empirically, longtermism has moved off the armchair and into more practical areas.

Philosophical arguments becoming more robust

Difficult questions in population ethics – such as whether it is a moral loss to fail to create a happy person – have become less relevant for longtermism. There are longtermist things we can do that aren’t about increasing the number of people in the future, but about improving the quality of the future (which will likely be very long in expectation).

As mentioned above, Will had initially thought that speeding up economic growth might improve the long-term future. However, he now thinks that economic growth will plateau at some point. We might be able to do things that help us get to that point faster, but either way, we’ll get there within the next few thousand years. By contrast, very few things would actually affect the very long-term future (i.e. millions or trillions of years) – and those are things like AI and pandemic prevention.

Longtermism moving off the armchair

In recent years, there has been a lot of progress in, and learning about, pandemic prevention and machine learning. For example, we can now test whether AI models exhibit deceptive behaviours. So there now seem to be a number of concrete things we could get some traction on.

Rob points out that if some ideas seem really big and important in theory, but there’s no way of applying them in practice, then we will always end up not letting those theoretical ideas determine our direction. Will agrees – he points out it’s very easy for academic research and ideas to just get lost in the weeds, even if they feel like they have practical relevance.

For example, there’s a lot of philosophy about inequality and whether there is a disvalue of inequality. In practice, however, it doesn’t matter because inequality is currently so vast. So it doesn’t really matter whether you simply add up benefits to people or whether you also give extra weight to the disvalue of inequality itself. [I think what Will means by “add up benefits to people” is that there are diminishing returns to wealth and most other things. Reducing inequality by shifting assets from the rich to the poor would therefore improve total welfare, even if you don’t care about inequality itself.]

Similarly, with global catastrophic risk – on one hand, it is practically relevant whether we increase spending on such risks by 30x or 70x. But on the other hand, it’s not practically relevant because we are so far from the optimal level of spending that just doubling it would already be a huge improvement.

Will thinks this is one of the most commonly misunderstood points about longtermism. When he goes around saying that we should invest more in pandemic preparedness or AI safety, he’s not saying that we should spend all our money and efforts on it. Just more, relative to where we are now. Will admits that he doesn’t know where the stopping point will be – there may well be a point where the best targeted opportunities for improving the long-term future are used up, such that the best longtermist actions are just things we should do generally to build a flourishing society. We may hit that at 50%, 10%, or possibly even 1% of our current resources. But given what the world currently prioritises, Will’s argument is simply that we should care more about future generations and the millions of years in the future.

How Will’s thoughts differ from other prominent EAs

Compared to perhaps most of the EA community (or at least the more prominent members):

- Most EAs are already very committed to growing the EA community, but Will thinks he’s even more in favour than most. Promoting values is especially important to Will.

- Will is also very concerned about preserving and promoting liberal democracy. Trying to do things that would help move away from, say, Hindu nationalism and some more authoritarian trends in India and the US could be very important.

- Will’s estimates for extinction risk are lower than those of Toby Ord and other people in the community. [Will says existential risk here but I think he’s actually referring to extinction risk.] The reason is that he thinks society is really messy and contains some self-correcting mechanisms. Plus there’s a big asymmetry in that most people generally act rationally in their own self-interest and don’t want to cause a major catastrophe. But when there is a war, all bets are off. For example, the USSR biological weapons programme employed 60,000 people devoted to trying to find the nastiest possible viruses, even though it ended up probably being bad for the USSR itself.

- He believes he is more concerned about war than perhaps most others are – he puts the chance of a great power war in our lifetimes, like a WWIII, at about 40%, which is roughly the base rate. [I’m not sure how they’re calculating the base rate, though.]

Other Interesting Points

- It’s commonly said that the Dvorak keyboard is better than the QWERTY keyboard, but Will couldn’t find any good arguments for this. The arguments he did find came from Dvorak himself.

- The first computer science pioneers, Alan Turing and I. J. Good, pretty much understood AI risks immediately back in the 1950s and early 1960s. They didn’t have all the arguments worked out but they got the key ideas that:

- artificial beings could be much smarter than us;

- there’s a question about what goals we should give them;

- they are immortal because they can replicate themselves; and

- there could be positive feedback loops, where they improve themselves better than we could.

- The podcast briefly talks about population ethics but says it’s a really difficult area and they won’t have time to get into it. (The argument for longtermism doesn’t really depend on it, either.) Will calls it “one of the hardest and most complex and challenging areas of moral philosophy”. [This made me feel better as I’ve definitely struggled with it, and remain unconvinced of the Total View.]

- There’s some discussion of the overkill hypothesis – i.e. that the reason most megafauna (large mammals) are in Africa is that those in other parts of the world were killed off by humans. [The overkill hypothesis is referred to in both Guns, Germs and Steel and Sapiens.]

- Some interesting animals he mentions are the glyptodonts (giant armadillos), Megatherium (a giant ground sloth) and Haast’s eagles (giant eagles that hunted moa, also a giant – but flightless – bird).

- Will also mentions the other Homo species that Sapiens largely killed off or out-competed. [Also discussed in Sapiens.] It’s possible our morality would have been quite different with more than one human species. In particular, humans are often regarded as being “above” other animals [for example, most people regard human life as sacred, but wouldn’t say the same for animal lives]. Perhaps that would not have happened if there had been two human species.

- A lot of people hate the idea that humans killed off megafauna, and prefer to blame climate change. Will says this was the issue they got the most heat on in What We Owe the Future, but he really thinks that the evidence for it is very, very strong. [For what it’s worth, Diamond and Harari both agree with Will.]

- Humans are actually very strange physically. We are one of very few species that cool themselves by sweating heavily (horses are another). We are also very good long-distance runners. This was how early humans hunted animals – they chased prey like zebra slowly, over the course of hours, until the animal collapsed from heatstroke and exhaustion.

My Thoughts

I’ve always liked Will. He just comes off as such a reasonable and grounded guy. Longtermism has been hard for me to “swallow”, partly because it has the potential to lead to some weird conclusions. But Will does an excellent job of making it sound more reasonable. Another thing I enjoyed was hearing about how Will also took quite a while to come round to longtermism.

I was sympathetic to Will’s point that we should focus more on longtermism, even if we don’t accept it’s the number one most important thing to devote our resources to. The idea of robust EA was also appealing. Previously, I’ve expressed scepticism that the future is good in expectation, and have been concerned that longtermism could imply we should prioritise building bunkers over helping poor people today. I was glad to see Will treat these as valid concerns rather than just handwaving them away. While I still disagree with his reasons for why the future trajectory looks good (explained below), I can easily get behind causes like clean energy and reducing the risk of war.

The podcast raised a lot of concerns I’ve had about longtermism. It did not address all of them to my satisfaction, but it did make me more open to longtermism and want to learn more about specific causes.

A question about which I remain unconvinced is whether the future is good in expectation. I am highly uncertain on this point and, while I have not thought about it as much as Will seems to have, I found his reasoning unpersuasive. In particular:

- It makes sense to view altruism as positive and sadism as negative, but I don’t think indifference equates to 0 as Rob and Will imply. By “indifference”, they really seem to mean “selfish”, and the latter has more negative connotations than the former. Pure indifference or selfishness may well end up as negative overall (though obviously not as negative as sadism), because negative externalities exist. I think the vast majority of agents in the world are largely indifferent, and only a few are altruists, particularly if you include non-human beings in the calculation. The world could still be a net negative in this case.

- There’s also a question about the distribution of power. Even if the impact of indifferent people really were 0, and even if altruists and indifferent people together outnumber sadists, sadists may have more power and more ability to affect the world negatively. It seems plausible that there is a positive correlation between having power and being sadistic. Relatedly, there could be a negative correlation between having power and being altruistic. This may be because:

- Altruistic people would be more inclined to give away money and power than sadistic or selfish people; and

- Obtaining power sometimes requires morally dubious acts that altruistic people may not want to do.

- (On the other hand, I think that people tend to be more altruistic once they have a certain level of wealth and power. It’s like Maslow’s hierarchy of needs: once your basic needs are met, you can focus on helping others.)

- People are also not entirely altruistic, entirely sadistic, or entirely selfish. Even someone who identifies as an altruist (effective or otherwise) will be selfish to some degree, and their selfish acts may have larger impacts than their altruistic ones (perhaps more so for non-effective altruists, who are less focused on impact). It’s therefore possible for altruists to outnumber sadists while harmful acts, whether selfish or sadistic, still outnumber or out-impact altruistic ones.

Though it was just a small part of the podcast, a highlight for me was Rob’s question about whether it would be better to promote longtermism by appealing to people’s self-interest. I loved Will’s response. It really emphasised that EA is, and should be, about trying to do what we think is best at any given time, while recognising that our view of “what is best” may change over time. That attitude, with its humility and openness to new ideas and information, is much more attractive than a community whose message is simply “X is what is best, trust us and do what we say.” Unfortunately, I think it’s very easy for people to come away with the latter message (especially when EA is filtered through mainstream media or even just young, overly enthusiastic EAs).

Rob and Will have great chemistry on this podcast, and it’s clear they get along well. Overall it was a much easier listen (and an easier summary to write) than my first attempt at a podcast summary. I am thinking of doing a follow-up summary of this EconTalk podcast with Will, where the interviewer is much less sympathetic to longtermism and EA.

What do you think of my summary above? Did you find Will’s arguments compelling? And do you think summaries for such long podcasts make sense, or do you find the idea of a podcast summary a bit weird? Let me know in the comments below!

If you liked this summary, you may also enjoy the following: